
This post introduces the core aspects of "Diffusion Pilot" as a tool and methodology, and assumes you have rudimentary knowledge of Stable Diffusion. For more in-depth dev blogs and details on the process, look for level 3 posts; to understand the "why" of it all, dig into the other essay posts.

Scheduled animation machines

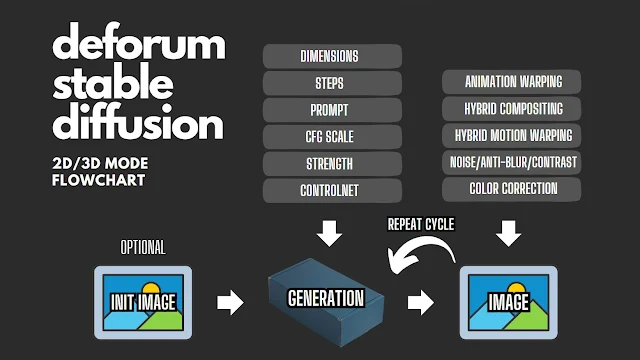

Advanced animation with Stable Diffusion (SD) at the moment usually requires one to interact with interfaces that feel a lot like these:

Heavy boxes of deeply wired computational machinery, taking in data through punched cards or tape, then beep-booping a lot inside until finally printing out the result.

You see, most AI animation applications started out as Google Colab (Jupyter) notebooks, which consist of blocks of code and, if you're lucky, some comments. While good for quick prototyping, developing, and sharing, that kind of environment does not translate well into tools for art. Like those bulky old computers, you "punch in" some parameters and a prompt (aka "scheduling"), then wait for the final result, tediously adjusting your inputs after each run. So while digital art in general has put ease of iteration at the forefront of the creative process, a machine of naked code like this works against that principle; it is better suited to precise data and careful planning.

This naked code soon got wrapped into user interfaces, either by the open source community, as in the case of the A1111 WebUI and Deforum, or by commercial startups like Kaiber. The latter differs only by hiding away most of the dials for the sake of simplicity, sacrificing control. I'd say this only goes as far as making the "punch in" process prettier and more structured; it is no more attractive to those seeking a more hands-on creative approach.
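To make "scheduling" concrete: Deforum-style tools take per-frame parameters as keyframe strings such as `0:(1.0), 30:(1.05)` (a frame number, then a value), which get expanded into one value for every frame before the render starts. Below is a minimal Python sketch of that expansion, assuming plain numeric values and linear interpolation between keyframes. Deforum itself also accepts math expressions inside the parentheses (for example using `t` for the frame number), which this sketch does not evaluate, and `parse_schedule` is a hypothetical name, not Deforum's API.

```python
import re
from typing import List

# Matches "frame:(value)" pairs, e.g. "0:(1.0), 30:(1.05)"
KEYFRAME = re.compile(r"(\d+)\s*:\s*\(([^)]*)\)")


def parse_schedule(schedule: str, n_frames: int) -> List[float]:
    """Expand a keyframe string into one value per frame (hypothetical helper).

    Assumptions: values are plain numbers, interpolation is linear, and the
    first/last keyframe values are held before/after the keyed range.
    """
    # The dict drops duplicate frame numbers (the last one wins); then sort by frame
    keys = sorted({int(f): float(v) for f, v in KEYFRAME.findall(schedule)}.items())
    if not keys:
        raise ValueError("no keyframes found in schedule")
    values: List[float] = []
    for frame in range(n_frames):
        if frame <= keys[0][0]:
            values.append(keys[0][1])
        elif frame >= keys[-1][0]:
            values.append(keys[-1][1])
        else:
            # Find the two keyframes surrounding this frame and blend linearly
            for (f0, v0), (f1, v1) in zip(keys, keys[1:]):
                if f0 <= frame <= f1:
                    t = (frame - f0) / (f1 - f0)
                    values.append(v0 + t * (v1 - v0))
                    break
    return values


# A zoom that ramps linearly from 1.0 to 1.05 over 30 frames, then holds
zoom = parse_schedule("0:(1.0), 30:(1.05)", 40)
```

Every value is fixed before the first frame is generated; changing your mind means editing the string and running the whole thing again, which is exactly the "punch in, then wait" loop described above.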

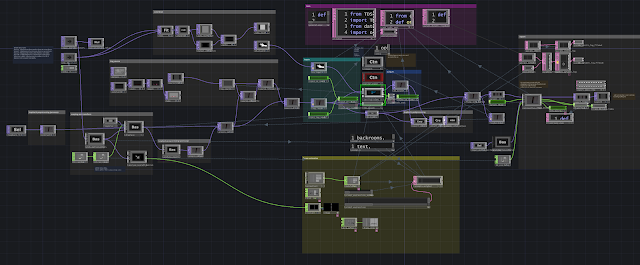

What if animating with Stable Diffusion felt more like this?

And this?

I know, right? It looks hellishly complex, even more so than the IBM 650. I am not simply making an argument for simplicity, though. Allow me to make a proposition...

"piloting" is the new scheduling 🚀

...okay, by the word "piloting" I don't only mean flying a hypothetical diffusion-spaceship, although I do think that's kind of cool.

It's meant to express how approaches leaning into modularity, live improvisation, and artistic agency could demystify the process of generative frame-by-frame AI animation, expand its potential as an artistic medium, and introduce alternative, deeper ways of working with it as a tool. The word "piloting" embodies these principles. It caters to creators who seek control and closer contact with the medium, including those whose work is experimental, where scheduling a giant box of code doesn't quite cut it.

Luckily, we have been seeing more and more efforts that lean in this direction, and here I will present the core building blocks of my own take on it by the name of "Diffusion Pilot", a toolkit currently in development. While this is more of a summary, subsequent posts will delve deeper into its technical specifics.

Frame-by-frame spirit

Until recently, most "made by AI" videos were made frame-by-frame with generative (static) image diffusion models, whereas truly video-native methods like Runway's Gen-2 or AnimateDiff emerged and grew popular much later. See my overview of generative AI animation techniques if you want to understand the distinction.

While video models are rapidly improving, the catch is that for animation (as an art form), "frame-by-frame" is probably where the most interesting and granular tool-like interaction can happen (made with/through AI, not by AI). As the AI provides one image after another, the artist is empowered to animate it through gradual changes such as zooming, panning, and adjusting prompts, parameters, and conditions.

The frame-by-frame process is at the heart of the tradition of animation, where inherently static media are stitched into illusions of motion and life. My point: "AI animation" can be that too.

This principle directly supports and enables the rest of the foundational aspects of "piloting" discussed further on. Simply put, "piloting" a video model would currently be far less accessible.

The traditional animation workflow continuously builds upon the last frame, producing a conscious and directed flow of motion and intent, whether it's cel animation or stop-motion. It's probably best illustrated by the oil-on-glass technique, though, where for each frame, paint is layered on top of the previous frames.

Weirdly enough, it serves as an analogy for how animating with Stable Diffusion involves sending frames back into the model through a feedback loop, making it observe its own outputs and generate "on top" of previous images by adding and removing latent noise.
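To make the structure of that feedback loop concrete, here is a toy Python sketch. It is not Stable Diffusion: `toy_img2img` is a hypothetical stand-in that collapses "add noise, then denoise under a prompt" into a simple blend toward a placeholder `target`, with `strength` playing the role of img2img's denoising strength (how much of each frame the model is allowed to repaint). What it does show is the loop itself: each output is moved by a camera transform and fed back in as the next input.

```python
from typing import Callable, List

Frame = List[float]  # a flattened grayscale image with values in [0, 1]


def pan(frame: Frame, shift: int) -> Frame:
    """Toy camera move: roll pixels sideways by `shift` positions, wrapping around."""
    shift %= len(frame)
    return frame[-shift:] + frame[:-shift] if shift else list(frame)


def toy_img2img(frame: Frame, target: Frame, strength: float) -> Frame:
    """Hypothetical stand-in for an img2img call.

    strength = 0 returns the input unchanged; strength = 1 discards it entirely,
    mirroring how denoising strength bounds how much a frame can change.
    """
    return [(1.0 - strength) * p + strength * t for p, t in zip(frame, target)]


def feedback_loop(init: Frame, target: Frame, n_frames: int, strength: float,
                  motion: Callable[[Frame], Frame]) -> List[Frame]:
    """Generate frames where every output becomes the next frame's input."""
    frames = [init]
    for _ in range(n_frames - 1):
        moved = motion(frames[-1])                            # camera transform on the last output
        frames.append(toy_img2img(moved, target, strength))  # "repaint" on top of it
    return frames


frames = feedback_loop([0.0] * 8, [1.0] * 8, n_frames=10, strength=0.3,
                       motion=lambda f: pan(f, 1))
```

Here each frame keeps 70% of the previous one, so the sequence drifts gradually toward the target instead of jumping to it. The same trade-off applies to the real thing: lower denoising strength keeps more of each previous frame (more continuity), while higher strength lets the model repaint more per frame.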

I only wish I saw more imaginative techniques using Stable Diffusion, but I suspect few sustain their interest through all the controversy and the intimidating punch-card schedule machines that current applications are. It has the potential to be way more than a passing TikTok gimmick. Meanwhile, the latest and greatest video generators usually only reinforce the "punch-card" way of working, often behaving like a toy version of it, designed to minimize labor, involvement, and barriers to entry, especially when offered as a paid service.

Standing on the shoulders of Deforum

Most of the "wheel" for animating with AI frame-by-frame is already invented, with the most extensive toolkit for it right now being the amazing open source effort of Deforum. I didn't know that initially though.

When I had just started learning about animation with SD, I had my hopes on "Dream Textures" in Blender, because I am building the "skeleton" of my film with conventional 3D. Their "render engine" update did an outstanding job with ControlNet support (which is essential for complex workflows), but it fell short by offering nothing to facilitate temporal consistency and continuity of motion from frame to frame.

Temporal consistency is well explored and discussed across the community of AI animation tinkerers, and again, it's covered in my general overview of AI animation. It's at the core of toolkits such as Stable WarpFusion, at that time limited to Patreon supporters of its creator...

...and Deforum.

"pff, that thing that makes the trippy infinite zoom videos? Everybody is already tired of that."

...I thought to myself.

I was wrong. Contrary to what most of its content would lead you to believe, Deforum has wider potential, and despite it being that kind of cumbersome schedule-and-wait machine, I was ready to use it. However, while it is well equipped to work with generic video material, I had my eyes set on optical flow warping with motion vectors exported straight from 3D software, which was not possible at the time.

Feeling teased by the potential, but unable to try my envisioned 3D-to-SD workflow, I either had to nag various developers to expand their tools or derive my own solutions from the strong foundation of established tools like Deforum.

In fact, Deforum is but one of many giants that form this growing tower of advancements on whose shoulders I stand. The vast majority of current progress is enabled by the open source nature of Stable Diffusion itself, and its DIY community is in a perpetual state of communal evolution. Looking even further back into its roots, you may also discover glaring ethical issues with the datasets these models are trained on. However, the fact that it is open source, a kind of "common good" unlike DALL·E or Imagen, is perhaps the only basis on which one could make an argument in its defense.

Modularity, modulation, and the power of open source

Open source means that nothing is a black box: anyone is able to learn, dissect, and derive their own twists and hacks from the existing pool of techniques. After what must have been one of my happiest Google search results ever, I discovered TDDiffusion, an implementation of Stable Diffusion through a locally run API inside TouchDesigner (TD), a powerful toolkit for the intersection of art and technology. This is where my own path of hacking and developing began.

Combining software and working through APIs is the bread and butter of software developers and DIY hacker culture. To me, though, as an artist with only half a foot inside tech and coding, having such direct, hands-on access to SD inside the limitless (and quite accessible) TouchDesigner is a game changer. It opens doors to a modular, deep, and endlessly customizable animation process, and I'm developing Diffusion Pilot as a working proof of that.

By itself, TDDiffusion works like a generic image generator, generating one image at a time in "txt2img" or "img2img" mode. At the heart of tools like Deforum, though, usually lies an img2img feedback loop that advances frame-by-frame, and that's where the fun begins. Besides replicating this established blueprint for SD animation, once I want something custom, my hands are free to hot-swap anything in the pipeline, routing the inputs and outputs of anything to anything, importing and exporting anything, anywhere. TD can also be trusted to handle all essential image (TOP) operations efficiently on the GPU, with extensive parameter modulation capabilities and a Python expression engine. Most importantly, it is inherently a toolkit for live setups, so scaling into real-time applications can potentially be seamless. My point is...

If Stable Diffusion were an audio synthesizer, TouchDesigner would now be the best way to build a modular synthesizer rack around it.
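As a rough Python analogy for that "modular rack" (TouchDesigner itself does this with wired operators, not with code like this), here is a hypothetical `Rack` whose named stages run in order once per frame and can be swapped out between frames without stopping anything. The stage names and the per-frame `state` dict are illustrative assumptions, and the lambdas merely stand in for real motion and img2img stages.

```python
from typing import Callable, Dict, List

Stage = Callable[[dict], dict]  # takes the per-frame state, returns the new state


class Rack:
    """Named processing stages run in order once per frame; any stage can be hot-swapped."""

    def __init__(self) -> None:
        self.order: List[str] = []
        self.stages: Dict[str, Stage] = {}

    def plug(self, name: str, stage: Stage) -> None:
        if name not in self.stages:
            self.order.append(name)  # a new module goes at the end of the chain
        self.stages[name] = stage    # an existing name is replaced in place

    def unplug(self, name: str) -> None:
        self.order.remove(name)
        del self.stages[name]

    def tick(self, state: dict) -> dict:
        """Run one frame through every stage, in order."""
        for name in self.order:
            state = self.stages[name](state)
        return state


rack = Rack()
rack.plug("motion", lambda s: {**s, "zoom": s["zoom"] * 1.01})
rack.plug("img2img", lambda s: {**s, "generated": s.get("generated", 0) + 1})
state = rack.tick({"zoom": 1.0})
# Mid-performance: swap the motion module for a different one, keeping its slot
rack.plug("motion", lambda s: {**s, "zoom": s["zoom"] * 0.99})
state = rack.tick(state)
```

The design point is that the frame loop never needs to know what is plugged in; replacing a module only changes what the next `tick` does.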

While this is my story, I hope this example inspires others to look into such opportunities and tinker with their own arrangements of this tech. What about styling a live camera feed with SD? Or generating live VR environments with SD? Or creating audio-reactive visuals with SD? Or using real-time hand tracking to cast fire:

Learned a new trick !🔥👋🧪

— Lyell (@dotsimulate) January 5, 2024

Take a peak at another #streamdiffusion sketch. Made using @1null1 + @tBlankensmith’s amazing mediapipe tool for hand tracking #realtime #ai pic.twitter.com/kkBudpl5o5

Going one level deeper, ComfyUI is a way to approach the underlying diffusion process itself in a similarly modular, customizable fashion. It too can be hooked up to TD for frame-by-frame processing. A handy component for this is also available from the same author, although the most cutting-edge SD implementations in TD are made by dotsimulate, who shares, maintains, and updates his suite of components and guides through his Patreon.
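For the ComfyUI route, one common pattern (the one used in ComfyUI's own API example scripts) is to export a workflow in ComfyUI's API format, patch node inputs, and queue it on the local server's `/prompt` endpoint, once per frame. In the sketch below, the node id `"3"` for the KSampler, its trimmed-down inputs, and the default address `127.0.0.1:8188` are assumptions that depend on your workflow and setup; `patch_inputs` and `queue_prompt` are hypothetical helper names.

```python
import copy
import json
import urllib.request


def patch_inputs(workflow: dict, node_id: str, **inputs) -> dict:
    """Return a copy of an API-format workflow with one node's inputs overridden."""
    patched = copy.deepcopy(workflow)
    patched[node_id]["inputs"].update(inputs)
    return patched


def queue_prompt(workflow: dict, host: str = "127.0.0.1:8188") -> dict:
    """Queue the workflow on a running ComfyUI server (network call, not run here)."""
    body = json.dumps({"prompt": workflow}).encode("utf-8")
    req = urllib.request.Request(f"http://{host}/prompt", data=body,
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


# A trimmed-down stand-in for an exported workflow (real ones contain many more nodes
# and link inputs such as the model and conditioning)
workflow = {"3": {"class_type": "KSampler",
                  "inputs": {"seed": 0, "denoise": 1.0, "steps": 20}}}

# Per frame: patch whatever should change, then queue_prompt(frame_job)
frame_job = patch_inputs(workflow, "3", seed=42, denoise=0.45)
```

For frame-by-frame img2img, the previous frame also has to reach the workflow, for example through an image-loading node; how a given TouchDesigner component handles that is outside this sketch.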

Live improvisation and reactive control

Although it was not my initial goal, the ability to "pilot" the live act of generating image after image became my most cherished discovery, made possible by TouchDesigner being well designed for live applications such as installation art. The name of this blog is proof enough of my enthusiasm.

Initially, I set out to make myself a tool that works on a linear timeline, mimicking the usual predetermined rendering process that advances frame-by-frame in video editing software. However, making a new API request through TDDiffusion each frame meant that all parameters could be continuously adjusted by the user or through automation, without the need to "schedule" anything. This is a huge deal for animation as a process, and it was a pivotal moment in my research and experimentation.

This places the generative image model and the artist in an active, continuous dialog.
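The difference from scheduling is easiest to see in code. In this hypothetical Python sketch, nothing is precomputed: each frame's request is built from whatever the controls (`knobs`) hold at the moment that frame is requested, so a fader, an LFO, or a person can change them mid-run. The field names follow the AUTOMATIC1111 WebUI API's `/sdapi/v1/img2img` endpoint; that TDDiffusion talks to that particular backend, and the default local URL, are assumptions here.

```python
import base64
import json
import urllib.request


def img2img_request(prev_frame_png: bytes, knobs: dict) -> dict:
    """Snapshot the live controls into one img2img request body."""
    return {
        "init_images": [base64.b64encode(prev_frame_png).decode("ascii")],
        "prompt": knobs["prompt"],
        "denoising_strength": float(knobs["strength"]),
        "seed": int(knobs["seed"]),
        "steps": int(knobs["steps"]),
    }


def send(payload: dict, url: str = "http://127.0.0.1:7860/sdapi/v1/img2img") -> bytes:
    """POST the request and return the first generated image as PNG bytes (not run here)."""
    req = urllib.request.Request(url, data=json.dumps(payload).encode("utf-8"),
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        return base64.b64decode(json.load(resp)["images"][0])


knobs = {"prompt": "oil on glass, drifting nebula", "strength": 0.45, "seed": 7, "steps": 20}
frame_a = img2img_request(b"<previous frame>", knobs)
knobs["strength"] = 0.6  # someone turns a knob between frames...
knobs["prompt"] = "oil on glass, burning forest"
frame_b = img2img_request(b"<previous frame>", knobs)  # ...and the very next request reflects it
```

In a running loop, the PNG returned by `send` would become `prev_frame_png` for the next request, closing the img2img feedback loop while the knobs stay open to change.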

An obvious opportunity that comes from this is to "steer" the imagined camera movement live as the model generates animation frames (as seen in my earlier video example as well), though I was definitely not the first to have that idea.
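One way to make live steering feel good, sketched here in Python as an assumption rather than a description of any particular tool, is to smooth the raw controller input into a camera velocity, so a jittery hand becomes a gliding camera. The resulting per-frame pan and zoom would then transform the previous frame before it goes back into img2img. All names and constants below are illustrative.

```python
from dataclasses import dataclass


@dataclass
class Camera:
    x: float = 0.0     # accumulated pan, in pixels
    y: float = 0.0
    zoom: float = 1.0  # accumulated zoom factor
    vx: float = 0.0    # current pan velocity, pixels per frame
    vy: float = 0.0


def steer(cam: Camera, stick_x: float, stick_y: float, zoom_in: float,
          smoothing: float = 0.8, speed: float = 4.0, zoom_rate: float = 0.02) -> Camera:
    """Advance the camera one frame from live stick input in [-1, 1].

    Exponential smoothing: each frame keeps `smoothing` of the old velocity and
    moves the rest of the way toward the stick's target velocity.
    """
    cam.vx = smoothing * cam.vx + (1.0 - smoothing) * stick_x * speed
    cam.vy = smoothing * cam.vy + (1.0 - smoothing) * stick_y * speed
    cam.x += cam.vx
    cam.y += cam.vy
    cam.zoom *= 1.0 + zoom_rate * zoom_in
    return cam


cam = Camera()
for _ in range(30):  # hold the stick fully right and push zoom for 30 frames
    steer(cam, stick_x=1.0, stick_y=0.0, zoom_in=1.0)
```

A higher `smoothing` gives heavier, more cinematic motion at the cost of responsiveness; because the input is read every frame, there is nothing to keyframe in advance.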

With all this, it's tempting to call this "VJing" Stable Diffusion, especially as model inference is rapidly getting more optimized and people have found workarounds to fake a real-time effect, such as my own attempt here.

One of the foundational works on meaningful, artistic live interaction with deep learning technology is the series of works called "Learning to See", together with the related PhD thesis "Deep Visual Instruments: Realtime Continuous, Meaningful Human Control over Deep Neural Networks for creative expression".

It primarily focuses on GANs, which might make you yawn in 2024, but it's at least worth a look for inspiration, as the thought process and concepts laid out there are as relevant as ever.

Artistic agency and deep conditioning

Everything written so far has had to do with control: artists taking control of these tools. Another reason Stable Diffusion is at the center of this writing is the widespread adoption of the ControlNet architecture and related conditioning methods such as IP-Adapter or T2I-Adapter.

This is brilliant stuff for all the control freaks out there, and if my vision were limited to static images, I'd stick to using an SD plugin for Krita instead of going through so much trouble and writing this blog.

Even in animation, to me it's not quite about giving up work. As mentioned previously, the foundation of my initially envisioned workflow was conventional, manual 3D, serving as a "skeleton" that supports the final look, which is formed by the neural network aesthetics that come from using AI. I had this kind of synergy in mind way back in the early stages of my film, before SD or ControlNet was even a thing.

Such tight 3D conditioning, together with complementary techniques such as optical flow warping, makes it possible to direct and stage scenes, composition, camera movement, and lighting in 3D software, then use SD to "paint" them frame by frame. With "live improvisation and reactive control", you can also watch over its shoulder as it generates and continuously direct it. Don't worry, it doesn't have feelings yet. It also doesn't need to be 3D, as most types of ControlNet and similar conditioning work just as well with 2D techniques.
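The optical flow warping mentioned above can be sketched in a few lines. Before each img2img step, the previous output is warped by per-pixel motion vectors (from an optical flow estimator, or rendered straight out of 3D software) so the model starts from an image that already matches the new frame's layout. This Python sketch assumes backward vectors, each pointing from a pixel in the current frame to where that surface point was in the previous frame, and uses nearest-neighbour sampling; renderers differ in sign and direction conventions, and real tools interpolate between pixels.

```python
from typing import List, Tuple

Image = List[List[float]]               # grayscale, indexed [row][col]
Flow = List[List[Tuple[float, float]]]  # per-pixel (dx, dy) in pixels, same size as the image


def backward_warp(prev: Image, flow: Flow) -> Image:
    """Pull each current-frame pixel from where the flow says it was in `prev`."""
    h, w = len(prev), len(prev[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx, dy = flow[y][x]
            # Nearest-neighbour lookup, clamped so off-screen samples reuse the border
            sx = min(max(round(x + dx), 0), w - 1)
            sy = min(max(round(y + dy), 0), h - 1)
            out[y][x] = prev[sy][sx]
    return out


# Everything moved one pixel to the right, so each pixel looks one step to the left
prev = [[0.0, 1.0, 2.0],
        [3.0, 4.0, 5.0]]
flow = [[(-1.0, 0.0)] * 3 for _ in range(2)]
warped = backward_warp(prev, flow)
```

The left column shows the usual side effect: newly revealed areas have no history and get filled with repeated border pixels, which is part of what the following img2img pass has to repaint.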

However, the dominant use of conditioning such as ControlNet in animation that I see online revolves around making glorified video filters, or style transformations:

"Behold, internet! I have turned myself into anime, and you can too, with the power of AI".

Another promised land is replacing conventional CGI altogether. Lucky for me, I'm only in it to explore new frontiers and make painterly, psychedelic, dream-like film. All the "wrong" that might come from this is the subject, the aesthetic, the part that interests me. For those trying to perfect their dancing anime waifus, I'm afraid the kind of animation I'm describing in this blog post is not that:

I transform real person dancing to animation using stable diffusion and multiControlNet

by u/neilwong2012 in StableDiffusion

...which brings me to

The content (bonus)

What anyone creates with open source tools is their business, and I don't think there's much to gain from pointing fingers and lecturing each other on what's "the real shit". I do think, though, that some overly generalized labels are still stuck on "AI animation" as a whole, and that some people get fixated on either following along (replicating) or writing it off as a gimmick, leading to a skewed perception of what's possible and of whether proper tools exist at all.

What I hope this post made obvious is that animation through Stable Diffusion has strong potential as an artistic tool and medium, one that can be used for way, way more scenarios and styles than an average TikTok feed might lead you to believe. Stylizing dance videos and the quest for "temporal consistency" is a temporary pursuit, with new papers and techniques overtaking each other every day, as methods for working with video are generally shifting to video-native neural networks and enhancements. Frame-by-frame techniques, like Deforum, are here to stay, though, maybe eventually called "old-school AI", holding a special charm unique to their medium, in contrast to where most AI developments are headed: a completely hands-off approach to creativity, with everything served to you on a personalized, yet generic plate.

Presenting the core building blocks of Diffusion Pilot - a deeper way of animating with and through Stable Diffusion.
